In this blog, I return to the environmental cost of AI, which I wrote about in the September 3rd and 10th blogs in response to Sonya Landau's question. The technology is changing fast, and the debate over how society should respond to it is being compared to some of the foundational documents of the United States. Below is an attempt to draw a parallel with the Federalist Papers:

In the late 1780s, shortly after the Industrial Revolution had begun, Alexander Hamilton, James Madison and John Jay wrote a series of 85 spirited essays, collectively known as the Federalist Papers. They argued for ratification of the Constitution and an American system of checks and balances to keep power-hungry “factions” in check.

A new project, orchestrated by Stanford University and published on Tuesday, is inspired by the Federalist Papers and contends that today is a broadly similar historical moment of economic and political upheaval that calls for a rethinking of society’s institutional arrangements.

In an introduction to its collection of 12 essays, called the Digitalist Papers, the editors overseeing the project, including Erik Brynjolfsson, director of the Stanford Digital Economy Lab, and Condoleezza Rice, secretary of state in the George W. Bush administration and director of the Hoover Institution, identify their overarching concern.

“A powerful new technology, artificial intelligence,” they write, “explodes onto the scene and threatens to transform, for better or worse, all legacy social institutions.”

Of course, anything this big and important needs guidelines. NIST (National Institute of Standards and Technology), the US federal agency responsible for establishing standards, produced some:

Under the October 30, 2023, Presidential Executive Order, NIST developed a plan for global engagement on promoting and developing AI standards. The goal is to drive the development and implementation of AI-related consensus standards, cooperation and coordination, and information sharing. Reflecting public and private sector input, on April 29, 2024, NIST released a draft plan. On July 26, 2024, after considering public comments on the draft, NIST released A Plan for Global Engagement on AI Standards (NIST AI 100-5). More information is available here.

The World Economic Forum has the most complete description that I could find of the excessive energy needs of AI technology in its present state. It also shows proposals for how to address these needs in the near, intermediate, and long-term future:

AI and energy demand

Remarkably, the computational power required for sustaining AI’s rise is doubling roughly every 100 days. To achieve a tenfold improvement in AI model efficiency, the computational power demand could surge by up to 10,000 times. The energy required to run AI tasks is already accelerating with an annual growth rate between 26% and 36%. This means by 2028, AI could be using more power than the entire country of Iceland used in 2021.
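To get a feel for what these growth rates mean, the short calculation below compounds them. It is a hypothetical sketch: the 100-day doubling time and the 26% to 36% annual growth range come from the quoted text, while the five-year horizon (roughly 2023 to 2028) is an assumption chosen only for illustration.

```python
# Hypothetical illustration: compounding the growth rates quoted above.
# The 100-day doubling time and the 26%-36% annual range come from the WEF text;
# the five-year horizon (about 2023 -> 2028) is an assumption.

DOUBLING_DAYS = 100
YEARS = 5  # assumed horizon


def annual_factor_from_doubling(doubling_days: float) -> float:
    """Growth factor over one 365-day year for a quantity that doubles every `doubling_days`."""
    return 2 ** (365 / doubling_days)


def compound(rate: float, years: int) -> float:
    """Total growth factor after `years` of compounding at annual `rate` (0.26 means 26%)."""
    return (1 + rate) ** years


print(f"Compute demand after one year: x{annual_factor_from_doubling(DOUBLING_DAYS):.1f}")
for rate in (0.26, 0.36):
    print(f"AI energy use at {rate:.0%}/yr over {YEARS} years: x{compound(rate, YEARS):.2f}")
```

A 100-day doubling time works out to roughly a 12.6-fold increase in compute demand per year, while 26% to 36% annual growth in energy use multiplies consumption by roughly 3.2 to 4.7 over five years.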

The AI lifecycle impacts the environment in two key stages: the training phase and the inference phase. In the training phase, models learn and develop by digesting vast amounts of data. Once trained, they step into the inference phase, where they’re applied to solve real-world problems. At present, the environmental footprint is split, with training responsible for about 20% and inference taking up the lion’s share at 80%. As AI models gain traction across diverse sectors, the need for inference and its environmental footprint will escalate.
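The point about inference growing can be made concrete with a little arithmetic. In this hypothetical sketch the 20/80 split comes from the quoted text, the footprint units are arbitrary, and the scenario in which inference doubles while training stays flat is purely an assumption.

```python
# Hypothetical illustration of the 20% / 80% training-inference split quoted above.
# Units are arbitrary; the "inference doubles" scenario is an assumption.


def inference_share(training: float, inference: float) -> float:
    """Fraction of the total footprint attributable to inference."""
    return inference / (training + inference)


TRAINING, INFERENCE = 20.0, 80.0  # today's split, in arbitrary units summing to 100

print(f"Today: {inference_share(TRAINING, INFERENCE):.0%} of the footprint is inference")
# Assumed scenario: inference demand doubles while training stays flat.
print(f"If inference doubles: {inference_share(TRAINING, 2 * INFERENCE):.1%}")
```

In this scenario inference's share rises from 80% to about 88.9%, which is one way to see why wider adoption pushes the footprint toward the inference phase.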

To align the rapid progress of AI with the imperative of environmental sustainability, a meticulously planned strategy is essential. This encompasses immediate and near-term actions while also laying the groundwork for long-term sustainability.

The long-term: AI and quantum computing

In the long term, fostering synergy between AI and burgeoning quantum technologies is a vital strategy for steering AI towards sustainable development. In contrast to traditional computing, where energy consumption escalates with increased computational demand, quantum computing exhibits a linear relationship between computational power and energy usage. Further, quantum technology holds the potential of transforming AI by making models more compact, enhancing their learning efficiency and improving their overall functionality — all without the substantial energy footprint that has become a concerning norm in the industry.

Realizing this potential necessitates a collective endeavor involving government support, industry investment, academic research and public engagement. By amalgamating these elements, it is possible to envisage and establish a future where advancement in AI proceeds in harmony with the preservation of the planet’s health.

As we stand at the intersection of technological innovation and environmental responsibility, the path forward is clear. It calls for a collective endeavor to embrace and drive the integration of sustainability into the heart of AI development. The future of our planet hinges on this pivotal alignment. We must act decisively and collaboratively.

Presently, the enormous power needs of AI, combined with the simultaneous need for an energy transition away from fossil fuels, are forcing companies to look to nuclear power. The most eye-catching move has been Microsoft's agreement to help resurrect the Three Mile Island plant:

In a striking sign of renewed interest in nuclear power, Constellation Energy said on Friday that it plans to reopen the shuttered Three Mile Island nuclear plant in Pennsylvania, the site of the worst reactor accident in United States history.

Three Mile Island became shorthand for the risks posed by nuclear energy after one of the plant’s two reactors partly melted down in 1979. The other reactor kept operating safely for decades until finally closing, for economic reasons, five years ago.

Now a revival is at hand. Microsoft, which needs tremendous amounts of electricity for its growing fleet of data centers, has agreed to buy as much power as it can from the plant for 20 years. Constellation plans to spend $1.6 billion to refurbish the reactor that recently closed and restart it by 2028, pending regulatory approval.

To those of you too young to know what this turn signifies, the Wikipedia entry for Three Mile Island might help. I was never an enthusiast for making nuclear energy a strong component of the energy transition (see the November 11th and 18th posts from 2014 and the strong responses that these blogs provoked). My main reason was that global reliance on nuclear power would open the door to a much wider spread of nuclear technology for military use. Other objections, such as what to do with the radioactive waste produced, are still unresolved but could be remediated with further research; the proximity to military applications could not. By all accounts, the primary objective of the energy transition is to convert electricity production to sustainable sources. So, even without resorting to nuclear energy, the amplified need for electricity to run AI is not an insurmountable problem.

However, the final section of the World Economic Forum piece, which discusses the long-term prospects of AI, holds the real key to its environmental impact. The technology for this scenario is quantum computing, which could drastically reduce the power needed to train and run AI.

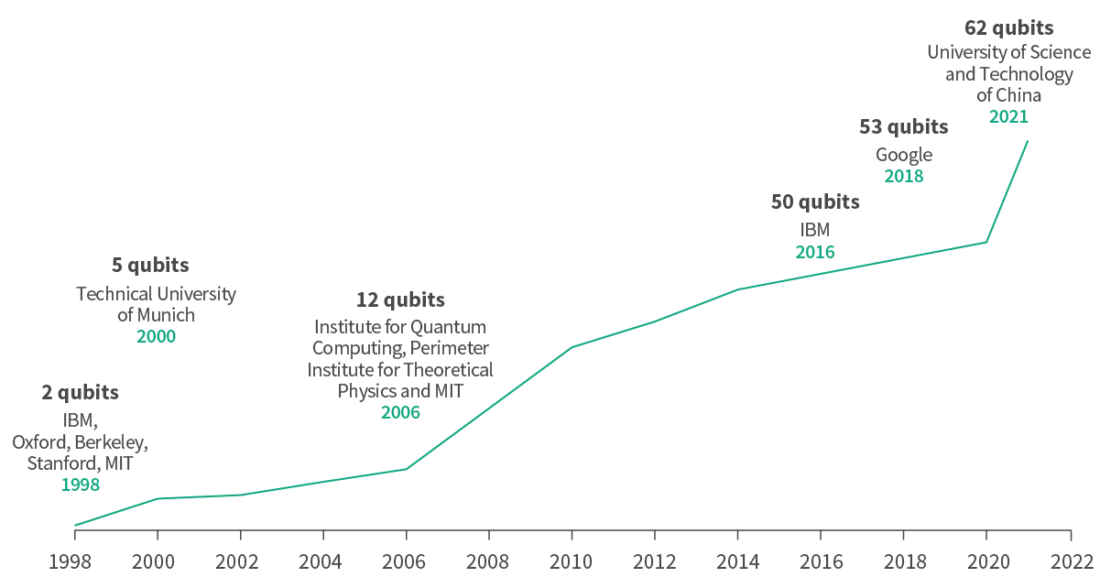

I have hardly discussed this technology before (one exception is the February 6, 2018 blog), but I will expand on it in future blogs. Those who want to know more right now can look at Amazon's explanation. One short paragraph introduces the key concept of qubits, shown in Figure 1. The article defines them as follows:

Quantum bits, or qubits, are represented by quantum particles. The manipulation of qubits by control devices is at the core of a quantum computer’s processing power. Qubits in quantum computers are analogous to bits in classical computers. At its core, a classical machine’s processor does all its work by manipulating bits. Similarly, the quantum processor does all its work by processing qubits.
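For readers who want something more tangible, here is a minimal textbook sketch of a single qubit. It models the qubit as a pair of complex amplitudes for the states |0> and |1> and uses the Hadamard gate as one example of the "manipulation" the quote mentions. This is a simplified state-vector model chosen for illustration, not how Amazon's service or any particular quantum computer represents qubits.

```python
import math

# A single qubit modeled as complex amplitudes (alpha, beta) for the basis
# states |0> and |1>, with |alpha|^2 + |beta|^2 = 1. Textbook model only.
Qubit = tuple[complex, complex]

ZERO: Qubit = (1 + 0j, 0 + 0j)  # analogous to a classical bit set to 0


def hadamard(q: Qubit) -> Qubit:
    """Apply the Hadamard gate, one example of a control operation on a qubit."""
    a, b = q
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def measurement_probabilities(q: Qubit) -> tuple[float, float]:
    """Probabilities of reading 0 or 1 when the qubit is measured."""
    a, b = q
    return (abs(a) ** 2, abs(b) ** 2)


superposed = hadamard(ZERO)
print(measurement_probabilities(ZERO))        # always reads 0, like a classical bit
print(measurement_probabilities(superposed))  # about 50% / 50%: a superposition
```

Unlike a classical bit, which is either 0 or 1, the qubit after the Hadamard gate holds both possibilities at once until it is measured; applying the gate a second time returns it to |0>.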

Figure 1 – Progress of quantum computing over the last 20 years (Source: Aviva)

The graph in Figure 1 shows the worldwide attention this technology has received and the progress made over the last 20 years. Estimates are that quantum computing will enter commercial applications toward the end of the decade, but estimates can be wrong!

Based on today’s understanding of the technology, quantum computing will revolutionize the energy efficiency of computing:

Today, quantum computers' electricity usage is orders of magnitude lower than that of any supercomputer, and this holds across all the different quantum architectures available. Take, for example, superconducting qubits, the most expensive architecture: these computers consume only about 25 kW. That amounts to 600 kWh daily, a thousand times less than the Frontier supercomputer. Neutral-atom quantum devices, such as PASQAL's, consume even less, up to about 7 kW.
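The arithmetic behind these numbers is simple to reproduce. In the sketch below, the 25 kW and 7 kW figures and the "thousand times less" ratio come from the quoted passage; treating them as constant, round-the-clock power draws is an assumption, and the Frontier figure is only what that ratio implies, not a measured value.

```python
# Reproducing the arithmetic in the quoted passage. Power figures and the
# 1000x ratio come from the quote; continuous, constant draw is an assumption.

HOURS_PER_DAY = 24


def daily_energy_kwh(power_kw: float) -> float:
    """Energy used in one day by a device drawing `power_kw` continuously."""
    return power_kw * HOURS_PER_DAY


superconducting = daily_energy_kwh(25)      # 600 kWh per day
neutral_atom = daily_energy_kwh(7)          # 168 kWh per day (at the 7 kW upper figure)
implied_frontier = superconducting * 1000   # kWh per day implied by the quoted ratio

print(superconducting, neutral_atom, implied_frontier)
print(f"Implied Frontier average power: {implied_frontier / HOURS_PER_DAY / 1000:.0f} MW")
```

Taken at face value, the quoted ratio implies that Frontier draws about 25 MW on average, or roughly 600,000 kWh per day.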

Understanding this technology requires serious background knowledge. In future blogs, I will try to fill in these gaps.